I’ve been doing a lot of thinking on pricing recently. Since launch we’ve tried a few different models and have learnt a lot. We also need to shift the pricing engine on-chain to make integrations easier and increase decentralisation.

Ideally we’d like a pricing (and capacity) mechanism that achieves the following:

- Flexibility - the price is independent of the capacity offered, so we can offer high price/high capacity or low price/low capacity. Today, bootstrapping new covers is difficult because we have to reach a material staking volume (50,000 NXM for the minimum price).
- Dynamic - pricing automatically increases when capacity is in short supply.
- Simple - as simple and intuitive as possible, so that complicated risk models can be run off-chain with simple representations on-chain.
- Gas Efficient - to reduce gas costs as much as possible.

I’ll address the first two points in more detail, as the last two are fairly self-explanatory.

Flexibility

Right now stakers provide one input (the amount of NXM staked) and the system needs two outputs: price and capacity. This limits the option space of price vs capacity, because we have to fix the relationship between them via a price curve. What we need is the ability for stakers to signal the price at which they would like to offer capacity.

This can be achieved by taking a stake-weighted average of the stakers’ price signals, but it opens up a potential issue: a staker can arbitrarily set a very high (or low) price to push the weighted average up (or down) to exactly their desired level.
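To make the manipulation concern concrete, here is a minimal Python sketch of a stake-weighted average price, plus the price a single staker would need to submit to land the average exactly on a target. `StakerInput`, `weighted_price` and `price_needed_for_target` are hypothetical names for illustration, and prices are assumed to be annual rates expressed as fractions (0.02 = 2%).

```python
from dataclasses import dataclass


@dataclass
class StakerInput:
    stake: float  # NXM staked on the risk
    price: float  # price signal as a fraction, e.g. 0.02 for 2% (assumption)


def weighted_price(inputs: list[StakerInput]) -> float:
    """Stake-weighted average of the stakers' price signals."""
    total_stake = sum(s.stake for s in inputs)
    if total_stake == 0:
        raise ValueError("no stake on this risk")
    return sum(s.stake * s.price for s in inputs) / total_stake


def price_needed_for_target(others: list[StakerInput], my_stake: float, target: float) -> float:
    """Price a new staker must submit so the weighted average equals `target` exactly."""
    other_stake = sum(s.stake for s in others)
    other_weighted = sum(s.stake * s.price for s in others)
    # Solve (other_weighted + my_stake * p) / (other_stake + my_stake) = target for p.
    return (target * (other_stake + my_stake) - other_weighted) / my_stake
```

With 90,000 NXM already signalling 2%, a staker with 10,000 NXM can move the average to 3% by submitting roughly 12%, which is exactly the kind of arbitrary input the approach below tries to rule out.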

Related to this, there will be an increasing number of cases where one, or relatively few, stakers set the price for a particular risk. For example, consider a business that wants to distribute its own white-labelled product on Nexus (e.g. earthquake cover) and needs to set prices efficiently for individual 1km areas. We want the protocol to be able to cater for this future use case, which is potentially very large.

Suggested Approach

Have two categories of stakers: 1) regular and 2) long-term aligned (LTA) stakers.

Regular stakers can influence price, but with restrictions; for example, when staking they choose their price input as either up, down, or same. This gives them some input into price setting, but they cannot arbitrarily shift the price to exactly where they want without substantial weight (NXM staked).
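One possible way to turn up/down/same signals into a bounded price move is sketched below. The step size (`MAX_STEP`, a 10% relative move when all stake agrees) and the use of a stake-weighted net direction are my assumptions for illustration only; the actual sensitivity of these inputs still needs to be worked out.

```python
from enum import IntEnum


class Signal(IntEnum):
    DOWN = -1
    SAME = 0
    UP = 1


# Hypothetical sensitivity: if all stake votes the same way, price moves by 10% (relative).
MAX_STEP = 0.10


def apply_regular_signals(current_price: float, votes: list[tuple[float, Signal]]) -> float:
    """Nudge the price by the stake-weighted net direction of regular stakers' signals.

    votes: (stake, signal) pairs. The net direction lies in [-1, 1], so the move is
    capped at +/- MAX_STEP however the votes are cast; no input can jump the price
    to an arbitrary level.
    """
    total = sum(stake for stake, _ in votes)
    if total == 0:
        return current_price
    net = sum(stake * int(sig) for stake, sig in votes) / total
    return current_price * (1 + MAX_STEP * net)
```

For example, with the price at 2% and 60% of stake voting up (the rest voting same), the price moves to about 2.12%. Even if every staker votes up, the move is capped at `MAX_STEP`, so the outcome depends on stake weight rather than on how extreme any single input is.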

LTA stakers have more freedom: within certain bounds (e.g. max price 100%, min price 1%) they can choose price as they see fit. We need a mechanism to ensure long-term alignment, so to become an LTA staker you need to have NXM locked for a long time, e.g. 12 months or more.
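The LTA rules above reduce to an eligibility check plus a clamp. A minimal sketch, assuming the lock requirement is measured as the time remaining until unlock and approximating 12 months as 365 days; `is_lta`, `lta_price` and the constants are hypothetical, with the 1% and 100% bounds taken from the example above.

```python
from datetime import date, timedelta

MIN_PRICE = 0.01                # 1% floor, from the example bounds
MAX_PRICE = 1.00                # 100% cap, from the example bounds
MIN_LOCK = timedelta(days=365)  # "12 months or more", approximated as 365 days (assumption)


def is_lta(lock_end: date, today: date) -> bool:
    """Hypothetical eligibility rule: stake must remain locked for at least MIN_LOCK from today."""
    return lock_end - today >= MIN_LOCK


def lta_price(requested: float, lock_end: date, today: date) -> float:
    """Accept an LTA staker's freely chosen price, clamped to [MIN_PRICE, MAX_PRICE]."""
    if not is_lta(lock_end, today):
        raise PermissionError("stake is not locked long enough to set price freely")
    return min(MAX_PRICE, max(MIN_PRICE, requested))
```

Here out-of-range prices are clamped rather than rejected; rejecting them outright would be an equally reasonable design choice.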

For our current products LTA stakers aren’t actually required, but it’s something we should be building the protocol for. Nexus requires diversification of risks to be successful, which means we need to handle the long tail of markets, and we’d be quite foolish to expect deep, liquid staking markets on the long tail. The liquidity fragmentation is too great, so we need to build for a future where experts on particular business lines handle the majority of pricing.

Dynamic

The concept here is that supply and demand push prices up or down automatically, like yields on Compound/Aave. Conceptually this is quite appealing, but it also suffers from the liquidity fragmentation issue described above. Supply and demand can only fully drive price where liquidity is sufficient, and this is unlikely on the long tail of risks, resulting in volatile or nonsensical prices. However, there is one area where dynamic pricing makes a lot of sense: when capacity on Nexus is near its maximum for a particular risk, the mutual should automatically charge more for its capacity.

Suggested Approach

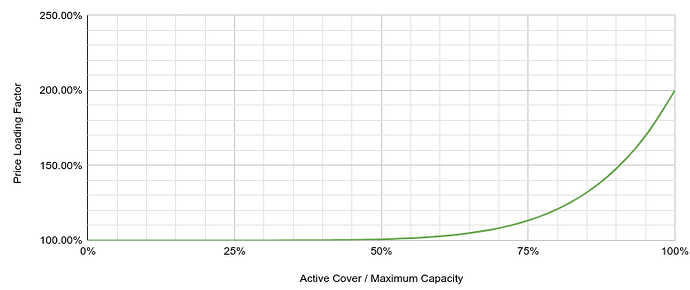

Apply a formulaic pricing loading to the price determined via staking when capacity on Nexus is near the global maximum. This should work like a bonding curve so that the loading is determined based on how much capacity is taken, rather than spot price.

Conceptually it would look like this:
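A minimal sketch of the bonding-curve loading, assuming a linear surge ramp that starts once 80% of capacity is used and adds up to 10 percentage points of price at full utilisation. `SURGE_START`, `SURGE_MAX` and the function names are hypothetical, and the loading is assumed to be added to the staking-derived price. The loading for a purchase is the average of the ramp over the utilisation range the purchase consumes, not the spot value at the current utilisation.

```python
SURGE_START = 0.8  # hypothetical: surge applies once 80% of capacity is used
SURGE_MAX = 0.10   # hypothetical: +10 percentage points of price at 100% usage


def _surge_integral(u: float) -> float:
    """Antiderivative of the unscaled linear ramp max(0, u - SURGE_START)."""
    x = max(0.0, u - SURGE_START)
    return x * x / 2


def surge_loading(used: float, amount: float, capacity: float) -> float:
    """Average surge loading over the capacity this purchase consumes (bonding-curve style)."""
    if amount <= 0 or used + amount > capacity:
        raise ValueError("amount must be positive and fit within remaining capacity")
    u0, u1 = used / capacity, (used + amount) / capacity
    scale = SURGE_MAX / (1 - SURGE_START)  # ramp slope so loading hits SURGE_MAX at u = 1
    return scale * (_surge_integral(u1) - _surge_integral(u0)) / (u1 - u0)


def quote_price(base_price: float, used: float, amount: float, capacity: float) -> float:
    """Price for the purchase: staking-derived base price plus the surge loading (additive, assumed)."""
    return base_price + surge_loading(used, amount, capacity)
```

Because the loading is integrated over the capacity taken, buying 100 units in one go carries the same total surge premium as buying two tranches of 50, so splitting a purchase does not avoid the surge; purchases that stay below the threshold pay no loading at all.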

Summary

After experimenting a lot with Gaussian curves and other more structured approaches, a simple stake-weighted average of price inputs is likely to be the most flexible option and the simplest to implement. When combined with a pricing surge factor, we can capture the benefits of dynamic supply/demand pricing where there is deeper liquidity, without being hampered by the need for liquidity on the long tail.

Next steps:

- Work through details and scenarios for the sensitivity of the up/down inputs.

- Confirm whether integration with current staking can work, or if a more ground-up revamp of staking is required.

- More detail on how the LTA group can be selected.