Hi all. First, I wanted to thank Hugh for sharing the notes on the general direction for smart contract cover in this thread.

I wanted to follow up specifically on the cover pricing algorithm. It’s good to hear a new pricing model is under development, and I think it is useful to explore the problems with the current model openly with the community, so that any new ideas that surface can be incorporated into the thinking around the new model.

The recent acceleration in DeFi bug exploits, and the claim payouts Nexus has made as a result, mean that losses on claims paid now exceed premiums earned. While the sample size is small, this suggests NXM holders are not being adequately compensated for the risk we are underwriting. As Hugh mentioned in the thread linked above, part of the reason is the current model’s sensitivity to staking, but there are additional factors worth considering as well.

If we assume all constants in the pricing algo stay fixed, we can simplify the pricing algorithm to the following:

![]()

Here the exponentiated value is constrained to lie between 0.01 and 1, which yields a cover cost ranging from a minimum of 1.3% for the least risky contracts to a maximum of 130% for the most risky ones.
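A minimal sketch of the clamp-and-scale step, assuming the price is simply 1.3 times the bounded value (the multiplier implied by 1.3% / 0.01 = 130% / 1 = 1.3). The name `risk_factor` is hypothetical, and the exponent that produces it is not reproduced here, since that depends on the full formula.

```python
# Bounds on the exponentiated value, as described above.
MIN_FACTOR = 0.01
MAX_FACTOR = 1.0
# Multiplier implied by the stated prices: 1.3% / 0.01 = 130% / 1.0 = 1.3.
PRICE_MULTIPLIER = 1.3


def cover_price(risk_factor: float) -> float:
    """Return the cover price as a fraction of the cover amount.

    `risk_factor` is a hypothetical stand-in for the exponentiated value
    produced by the full pricing formula; it is clamped to [0.01, 1].
    """
    clamped = min(max(risk_factor, MIN_FACTOR), MAX_FACTOR)
    return PRICE_MULTIPLIER * clamped


if __name__ == "__main__":
    for factor in (0.0, 0.01, 0.5, 1.0, 3.0):
        print(f"factor={factor:>4}: price={cover_price(factor):.1%}")
```

Any value at or below 0.01 lands on the same 1.3% floor, so once the inputs are pushed past that threshold, contracts of very different risk end up with identical prices.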

Currently, most (if not all) contracts are priced at 1.3% of the cover amount, which implies that all covered contracts are equally risky; we know that is not the case.

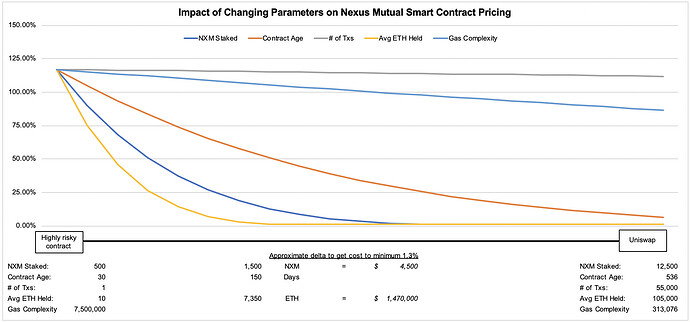

To illustrate graphically, the chart above shows how the price changes when each of these parameters is changed on its own, with the rest held at their starting values. I chose the starting values to reflect what I would consider an extremely risky contract, and the end values to match those of Uniswap.

For context, the risky contract is 1 month old, has 500 NXM staked against it, has processed 1 transaction, has held an average of 10 ETH over the last 30 days, and is roughly 50% more complex than MakerDAO’s MCD, measured by gas units consumed on deployment.

Under the current pricing algorithm, staking an additional 1,500 NXM ($4,500 at $3/NXM) reduces the cost of cover to 1.3% without changing any other parameter. Similarly, increasing the average amount of ETH in the contract by 7,350 ETH (almost $1.5m) would on its own also reduce the price to 1.3%. Changes in gas complexity and number of transactions have a more limited impact.
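The capital comparison in the paragraph above can be checked in a few lines. This assumes the $3/NXM price from the text and an ETH price of $200, which is the price implied by 7,350 ETH being "almost $1.5m"; neither is a live market price. Both amounts are capital committed (staked or deposited), not money spent.

```python
# Prices used in the example; ETH_PRICE_USD is an assumption inferred
# from "7,350 ETH (almost $1.5m)", not a quoted market price.
NXM_PRICE_USD = 3.0
ETH_PRICE_USD = 200.0

# Changes that each, on their own, push the risky contract to the 1.3% floor.
EXTRA_STAKE_NXM = 1_500
EXTRA_AVG_ETH = 7_350


def capital_usd(amount: float, unit_price_usd: float) -> float:
    """Dollar value of capital committed (staked or deposited), not spent."""
    return amount * unit_price_usd


stake_capital = capital_usd(EXTRA_STAKE_NXM, NXM_PRICE_USD)
eth_capital = capital_usd(EXTRA_AVG_ETH, ETH_PRICE_USD)

if __name__ == "__main__":
    print(f"Extra stake:   ${stake_capital:,.0f}")
    print(f"Extra avg ETH: ${eth_capital:,.0f}")
    print(f"Ratio:         {eth_capital / stake_capital:.0f}x")
```

Under these assumptions the staking route needs over 300 times less capital than the ETH route to reach the same 1.3% price.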

This analysis highlights a few issues:

- Adding ETH to the contract doesn’t make it inherently less risky.

- Average ETH held by the contract can be gamed to lower the cost of cover (I’m not sure exactly how Nexus measures this).

- It is way too cheap to stake and lower the price.

- A contract that gets older isn’t necessarily less risky. You could argue that age implies the contract has avoided hacks so far and is therefore likely safer, but an old contract with no ETH in it can suddenly start attracting deposits and usage after a year. The algorithm would treat it as a safe contract, even though it has never held enough value to be a meaningful target.

- The number of transactions reflects the use case more than the risk.

- Gas complexity is directionally correct, but low gas doesn’t mean low risk. A simple contract can be written just as poorly as a complicated one, though bugs are of course more likely the more complex the code is.
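To make the gaming concern concrete, here is a sketch under one assumed measurement method: a simple 30-day trailing mean of end-of-day balances. It is not clear how Nexus actually measures average ETH held, so `trailing_average` and the balances below are hypothetical. Under this assumption, a deposit that sits in the contract for a single day keeps raising the average for as long as that day stays in the window.

```python
def trailing_average(daily_balances: list[float], window: int = 30) -> float:
    """Mean of the last `window` end-of-day balances (hypothetical metric)."""
    recent = daily_balances[-window:]
    if not recent:
        raise ValueError("need at least one daily balance")
    return sum(recent) / len(recent)


# The risky contract from the example: roughly 10 ETH held every day.
baseline = [10.0] * 30

# Same history, but someone parks an extra 300 ETH for one day only
# (today) and withdraws it tomorrow.
gamed = [10.0] * 29 + [310.0]

# After the withdrawal, the one-day spike is still inside the window.
after_withdrawal = gamed[1:] + [10.0]

if __name__ == "__main__":
    print(trailing_average(baseline))
    print(trailing_average(gamed))
    print(trailing_average(after_withdrawal))
```

Here one day of 300 extra ETH doubles the measured average, and the effect persists for the rest of the window. A longer window raises the ETH needed for the same effect but does not remove the lever.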

Hugh has said that a goal of the new pricing model will be to eliminate non-stake variables. I strongly support that idea, as each variable exposes an attack vector. Removing them pushes the entire burden of pricing onto risk assessors: anyone who wants to dramatically reduce the cost of cover for a risky contract has to assume that risk by putting skin in the game and adding capital to the pool. It also makes it easier for the mutual to calibrate the pricing algorithm, which is difficult to do with five variables of different weights.
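For illustration only, a stake-only model can be written with a single calibration constant. The sketch below assumes a hypothetical linear curve running from the 130% ceiling at zero stake to the 1.3% floor at `stake_for_min_price`; it is not the model Nexus is developing, whose shape is not described here. The point is that calibration reduces to choosing one number rather than balancing five weights.

```python
MIN_PRICE = 0.013  # 1.3% floor from the current model
MAX_PRICE = 1.3    # 130% ceiling from the current model


def stake_only_price(stake_nxm: float, stake_for_min_price: float) -> float:
    """Hypothetical stake-only price as a fraction of the cover amount.

    Falls linearly from MAX_PRICE at zero stake to MIN_PRICE once
    `stake_nxm` reaches `stake_for_min_price`, the single calibration knob.
    """
    if stake_for_min_price <= 0:
        raise ValueError("stake_for_min_price must be positive")
    fraction = min(max(stake_nxm, 0.0) / stake_for_min_price, 1.0)
    return MAX_PRICE - (MAX_PRICE - MIN_PRICE) * fraction


if __name__ == "__main__":
    # 2,000 NXM (500 + 1,500) floors the risky example in the current model;
    # compare it with a hypothetical calibration 100x stricter.
    for knob in (2_000, 200_000):
        price = stake_only_price(2_000, knob)
        print(f"knob={knob:>7,} NXM: 2,000 NXM staked -> {price:.1%}")
```

With the stricter knob, the same 2,000 NXM stake barely moves the price off the ceiling, so flooring a risky contract would require far more capital at risk.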

Interestingly, a change to a model where only NXM stake is considered would also increase demand for NXM, thus helping to capitalize the mutual. Contracts that want to self-insure would have to acquire a decent NXM stake. Because of the mutual’s capital efficiency and pooled risk model, this NXM stake should still be significantly more attractive than self-insurance. This would also be a step towards putting a greater emphasis on Nexus Mutual’s other (often overlooked) product: NXM itself. A larger purchase of NXM could in theory be considered both an investment and a vehicle for self-insurance via a mutualized model.

A question for the community is whether there is good reason to keep any variables other than NXM stake. I am in favor of simplicity, but if we were to keep any, my preference would be the product of contract age and average ETH held. Together these could be viewed as an uncollected bug bounty: a sign of relative security, similar to the argument that Bitcoin is a $200B bug bounty that no one can figure out how to collect.
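A minimal sketch of the age × average-ETH metric, with hypothetical function names and data. It also shows a design choice worth weighing against the dormant-contract concern raised earlier: because the product pairs lifetime age with a recent average, a contract that sat empty for a year and then attracted deposits scores as if that ETH had been exposed the whole time. The second function, which sums daily balances over the contract's whole life (the ETH-days actually held), is shown only as an alternative for comparison.

```python
def bounty_product(age_days: int, avg_eth_held: float) -> float:
    """Contract age multiplied by recent average ETH held, in ETH-days."""
    return age_days * avg_eth_held


def bounty_lifetime(daily_balances: list[float]) -> float:
    """Alternative: total ETH-days actually held over the contract's life."""
    return sum(daily_balances)


# Hypothetical contract: empty for 335 days, then 1,000 ETH for the last 30.
history = [0.0] * 335 + [1_000.0] * 30
recent_avg = sum(history[-30:]) / 30

product_score = bounty_product(len(history), recent_avg)
lifetime_score = bounty_lifetime(history)

if __name__ == "__main__":
    print(f"age x avg ETH:     {product_score:,.0f} ETH-days")
    print(f"lifetime ETH-days: {lifetime_score:,.0f} ETH-days")
```

For this contract the product is about twelve times the ETH-days it has actually held, which is the same blind spot described for contract age on its own.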

I’m sure I’m off on some of my assumptions here and these are just initial thoughts – Hugh & team are much closer to this so let me stop here and open the thread up to feedback and discussion.