Thanks @aleks! Great post, and it’s something I’ve been thinking about for a while as well.

Before getting into details it’s worth highlighting my broader goals for the pricing mechanic:

- Simplicity - the bare minimum number of factors, which pushes complexity off-chain to risk assessors (who can come up with risk models as complex as they wish)

- Decentralisation - simple enough, and reliant only on on-chain info, so it can run entirely on-chain (vs the current off-chain quote engine)

- Flexibility - the ability to price not only smart contract cover but any type of risk, so we can roll out new products quickly

Also, it’s worth noting that the pricing mechanic has to solve two problems:

- Price the Risk

- Determine how much capacity to offer on the risk

Right now pricing is based on the factors in the original post, and is supplemented by staking. Staking isn’t necessary to offer cover or a price: e.g. if nobody was staking on Compound, Nexus would still offer cover, as Compound has been sufficiently “battle-tested”. However, for new contracts staking is required, and we’re seeing lots of potential demand here that can only be enabled by staking.

Note: this also plays into reward levels for staking. If staking isn’t actually required then rewards should be lower, but if staking is required they need to be higher (currently the reward mechanism doesn’t differentiate on this).

Capacity is actually a bit more tricky to get right. We are trying to balance two items: a) using staking as a mechanism to indicate when it is worthwhile deploying the mutual’s pool to a particular risk, taking advantage of the pooled liquidity model, vs b) stopping the attack of staking a small amount on a buggy contract → taking a large cover → sacrificing the stake → getting the claim payout.

To achieve all of the above goals we’ve come up with a new pricing framework that is based entirely on staked NXM and doesn’t rely on anything else. We’re currently tweaking some parameters and doing some more testing, so we will share more details soon. It’s also worth noting that our new pricing framework only really works with pooled staking, so we have to release that first.

Overview of New Pricing

Staked NXM is the only factor that influences both price and capacity.

Price

Simple linear interpolation between a max price (TBA ~25% pa?) and a min price (1.3%) based on how much value is staked. The min price is reached once a certain amount of NXM value (in ETH terms) has been staked.
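
To make the interpolation concrete, here is a minimal Python sketch. The 25% and 1.3% bounds come from the figures above (the max is still TBA); the function name, the `full_stake_eth` threshold, and the clamping outside the range are assumptions for illustration only, not the on-chain implementation.

```python
def annual_price(staked_eth: float,
                 full_stake_eth: float,
                 max_price: float = 0.25,
                 min_price: float = 0.013) -> float:
    """Annual cover price as a fraction of the cover amount (0.013 = 1.3% pa).

    staked_eth      -- value of NXM staked on the risk, in ETH terms
    full_stake_eth  -- hypothetical stake level at which the min price applies
    """
    if full_stake_eth <= 0:
        raise ValueError("full_stake_eth must be positive")
    # Share of the way towards the stake level that unlocks the min price,
    # clamped to [0, 1] so extra stake never pushes the price below the floor.
    progress = min(max(staked_eth / full_stake_eth, 0.0), 1.0)
    # Straight line from max_price (no stake) down to min_price (full stake).
    return max_price - (max_price - min_price) * progress


# Hypothetical threshold: min price reached at 1,000 ETH of staked value.
for stake in (0, 250, 500, 1_000, 2_000):
    print(f"{stake:>5} ETH staked -> {annual_price(stake, 1_000):.2%} pa")
```

With no stake the price sits at the max, and any stake beyond the threshold leaves it at the min.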

Capacity

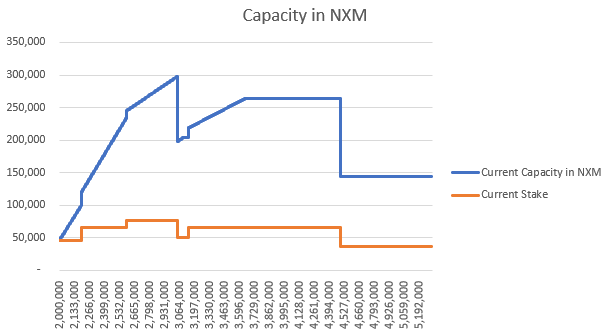

When someone stakes, the capacity will be the staked NXM value to start with. This restricts the attack, as loss of stake = claim payout. Then, over time, the mutual will release more capacity up to a maximum multiple of stake, e.g. 3x, which proxies for the battle-tested aspect.

If a stake is withdrawn, all capacity, including the additional mutual pool capacity, is immediately withdrawn. This allows the mutual to respond quickly to changes in risk: if stakers withdraw, price should increase and capacity should be taken away.
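
A rough Python sketch of the capacity rule follows. It reads the 3x as extra pool capacity on top of the stake, so that a fully ramped stake makes up 25% of total capacity. That reading, the linear release, and the `ramp_blocks` parameter are all assumptions; the actual release curve and parameters have not been decided.

```python
def capacity_eth(staked_eth: float,
                 blocks_since_stake: int,
                 ramp_blocks: int,
                 extra_multiple: float = 3.0) -> float:
    """Cover capacity (in ETH terms) offered on a risk.

    staked_eth          -- current staked NXM value in ETH terms
    blocks_since_stake  -- blocks elapsed since the stake was placed
    ramp_blocks         -- hypothetical number of blocks to full release
    extra_multiple      -- assumed extra pool capacity as a multiple of stake
    """
    if ramp_blocks <= 0:
        raise ValueError("ramp_blocks must be positive")
    if staked_eth <= 0:
        # Stake withdrawn: all capacity, including pool capacity, goes at once.
        return 0.0
    # Fraction of the additional pool capacity released so far (assumed linear).
    progress = min(max(blocks_since_stake / ramp_blocks, 0.0), 1.0)
    # Starts at 1x stake, so an attacker's payout cannot exceed the lost stake.
    return staked_eth * (1.0 + extra_multiple * progress)


# 100 ETH of stake, hypothetical ramp of 1,000 blocks.
for blocks in (0, 250, 500, 1_000, 2_000):
    print(f"block {blocks:>5}: {capacity_eth(100, blocks, 1_000):.0f} ETH capacity")
```

At block 0 capacity equals the stake, which is what limits the stake-and-claim attack. Once the ramp completes the stake is 1 / (1 + 3) = 25% of total capacity under this reading.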

Conceptually it looks something like this (block time on the x-axis):

A note on staking rewards:

Under this new model, Risk Assessors are providing 25% of the capital/capacity (if we use a 3x factor) and 100% of the expertise (pricing is fully reliant on stake). Currently, Risk Assessors provide a varying amount of capital (quite often none is actually required) and a varying amount of expertise (new protocols → lots, older protocols → not much).

Currently Risk Assessors get 20% of the cover cost, but in the new model the mutual is much more reliant on them to be successful. Providing 25% of the capital and 100% of the expertise suggests rewards should be around 50% of the cover cost.

We haven’t come to a view on the starting factors yet, but hopefully this provides more insight into the structure and thinking behind our proposed next steps.